Sophisticated statistics in medical research can be hard to translate into layman's terms, and when the translation goes wrong, the common misinterpretations below can be downright misleading.

1. Odds ratios don't express relative risk.

In 1999, media reports resulting from a study published in the New England Journal of Medicine wrongly suggested that black patients and women were 40 percent less likely than white patients and men, respectively, to undergo cardiac catheterization.

The study's authors had used odds ratios to describe the significance of their findings—a common practice among statistically sophisticated researchers—which showed that black patients and women were definitely less likely to be referred for invasive procedures than white patients and men, but not how much less likely.

Far from being 40 percent less likely, as a misinterpretation of the 0.60 odds ratio suggested, the relative risk ratio was closer to 0.93, "an absolute difference in referral rates of about 5 percentage points," said Frank Davidoff, MD in a letter to the editor.

Editors of the journal soon published a reply taking responsibility for the "media's overinterpretation" of the results. "We should not have allowed the use of odds ratios in the Abstract," wrote the editors. "As Schwartz et al. point out, risk ratios would have been clearer to the readers."

Risk ratios are clearer, because they lend themselves to "direct intuitive interpretation," wrote William Holcomb, MD in a review of literature in Obstetrics & Gynecology. "If the risk ratio equals X, then the outcome is X-fold more likely to occur in the group with the factor compared with the group lacking the factor."

In other words, a risk ratio of 2.00 means the outcome is 2 times as likely to occur in a group with a particular characteristic compared to another group. A risk ratio of 1.07 means the outcome is 7 percent more likely to occur in the group it describes.

In the 1999 study about gender and race bias in referrals for cardiac catheterization, a risk ratio of 0.93 could be used to say black patients were 7 percent less likely to be referred than white patients. Odds ratios cannot be used to make similar statements.
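To see why the two measures diverge, here is a minimal sketch that computes both from a pair of rates. The referral rates of 90 percent and 84 percent are hypothetical values chosen to roughly mirror the ratios quoted above; they are not figures taken from the study.

```python
def odds(p: float) -> float:
    """Convert a probability (0 < p < 1) into odds: p / (1 - p)."""
    return p / (1.0 - p)


def risk_ratio(p_group: float, p_reference: float) -> float:
    """Ratio of two probabilities."""
    return p_group / p_reference


def odds_ratio(p_group: float, p_reference: float) -> float:
    """Ratio of two odds."""
    return odds(p_group) / odds(p_reference)


# Hypothetical referral rates (assumptions for illustration only).
p_reference = 0.90  # reference group
p_group = 0.84      # comparison group

rr = risk_ratio(p_group, p_reference)
or_ = odds_ratio(p_group, p_reference)

print(f"risk ratio: {rr:.2f}")
print(f"odds ratio: {or_:.2f}")
```

With these assumed rates, the risk ratio comes out near 0.93 while the odds ratio comes out near 0.58. Reading the odds ratio as "about 40 percent less likely" would badly overstate a gap that is really about 7 percent in relative terms.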

2. Risk ratios don't express absolute differences.

Of course, using risk ratios to describe other ratios—such as rates of referral for cardiac catheterization—can also be misleading.

If black patients were 7 percent less likely to be referred, one might expect that to mean if white patients were referred 70 percent of the time, black patients were referred 63 percent of the time. 70 percent minus 7 percent equals 63 percent, right?

Wrong. It's easy to forget that a risk ratio multiplies the baseline rate; it is not subtracted from it. Here's how the math actually works out: 70 percent times the risk ratio (0.93) equals 65.1 percent. The gap between 70 percent and 65.1 percent is the difference in "absolute" terms: black patients were approximately 5 percentage points less likely to be referred for invasive cardiac procedures.
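The same arithmetic as a short sketch, using the 70 percent baseline and 0.93 risk ratio from the example above. The function and variable names are illustrative.

```python
def apply_risk_ratio(baseline_rate: float, rr: float) -> float:
    """Rate in the comparison group implied by a baseline rate and a risk ratio."""
    return baseline_rate * rr


baseline_rate = 0.70  # white patients referred 70 percent of the time
rr = 0.93             # risk ratio for black patients

group_rate = apply_risk_ratio(baseline_rate, rr)
relative_reduction = 1.0 - rr                    # fraction of the baseline rate
absolute_difference = baseline_rate - group_rate  # difference between the two rates

print(f"comparison group rate: {group_rate:.1%}")
print(f"relative reduction:    {relative_reduction:.0%}")
print(f"absolute difference:   {absolute_difference * 100:.1f} percentage points")
```

The relative reduction is a fraction of the baseline, while the absolute difference is a plain subtraction of one rate from the other, which is why the two numbers differ.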

It seems strange that both statements are technically correct: black patients were 7 percent less likely to be referred than white patients in relative terms and about 5 percentage points less likely in absolute terms. But it does remind us how statistics and probabilities can be used to mislead or sensationalize, especially when the gap between the relative and absolute figures is much larger than 2 points.

These mistakes are still relevant today.

Last week, ACVP blog discussed a recent study demonstrating a risky drug interaction between two common statins and an anti-clotting drug.

This study also expressed its results in odds ratios, which we translated to a relative risk ratio using the method described by Zhang and Yu in the Journal of the American Medical Association in 1998.

The relative risk ratio ended up being pretty close to the odds ratio—approximately 1.42 compared to 1.46—which occurs when the prevalence of the outcome in absolute terms is relatively low.
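For readers who want to try the conversion, the Zhang and Yu correction can be written as RR = OR / ((1 − P0) + P0 × OR), where P0 is the rate of the outcome in the unexposed (reference) group. The sketch below is a minimal version of that formula. The baseline rates in the loop are hypothetical values for illustration and are not taken from either study discussed here.

```python
def odds_ratio_to_risk_ratio(odds_ratio: float, baseline_risk: float) -> float:
    """Approximate a risk ratio from an odds ratio (Zhang and Yu, 1998).

    baseline_risk is the outcome rate in the reference group, between 0 and 1.
    """
    if not 0.0 <= baseline_risk <= 1.0:
        raise ValueError("baseline_risk must be between 0 and 1")
    return odds_ratio / ((1.0 - baseline_risk) + baseline_risk * odds_ratio)


# Hypothetical baseline rates (assumptions), same odds ratio each time.
for baseline_risk in (0.01, 0.10, 0.50):
    rr = odds_ratio_to_risk_ratio(1.46, baseline_risk)
    print(f"baseline {baseline_risk:.0%}: odds ratio 1.46 -> risk ratio {rr:.2f}")
```

The lower the baseline rate, the closer the result stays to the odds ratio. At the other extreme, with an assumed 90 percent baseline, an odds ratio of 0.60 converts to a risk ratio of about 0.94, which shows how far apart the two measures drift when the outcome is common.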

When this happens, misinterpreting the odds ratio as a risk ratio makes little difference, but a particularly high risk ratio can be used to sensationalize a study's results:

Significantly, he suggested that Cohen's statement that dose monitoring could have reduced major bleeding by '30% to 40%, compared with well-controlled warfarin,' was misleading. 'If you made dabigatran perfectly safe (0 bleeds), the best it could have been was 3.36% better than warfarin,' Dr. Mandrola wrote. 'Dr. Cohen was talking about relative risk reduction, which is not important to the patient considering taking a drug.' (ACVP Blog)

On his blog, John Mandrola, MD, was calling out criticism of dabigatran that relied on relative risk reductions rather than absolute differences, which can be misleading from a decision-making standpoint.

Indeed, in the study we discussed last week, the drug interaction increased the risk of a major hemorrhage by approximately 42 percent in relative terms. A 42 percent increase seems massively significant, but in absolute terms, this would have been a difference of less than 1 percentage point.

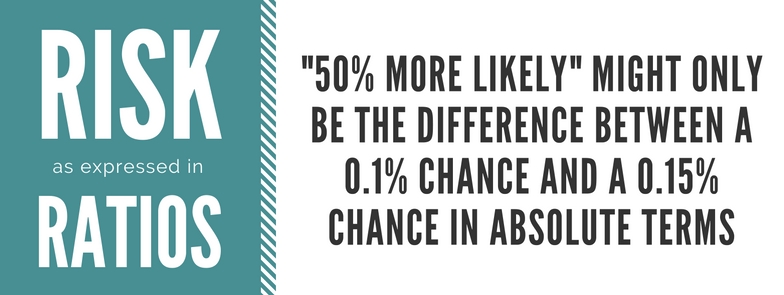

Taking this to a logical extreme, what a risk ratio can describe as "50 percent more likely" might only be the difference between a 0.1 percent chance and a 0.15 percent chance in absolute terms.
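A quick sketch makes the point concrete: the same "50 percent more likely" translates into very different absolute differences depending on the starting rate. The 0.1 percent and 2 percent baselines echo the examples in this post; the 20 percent baseline is a hypothetical value added for contrast.

```python
def absolute_difference_points(baseline_percent: float, rr: float) -> float:
    """Absolute change, in percentage points, implied by a risk ratio."""
    return baseline_percent * rr - baseline_percent


rr = 1.5  # "50 percent more likely"

# The 20.0 baseline is an assumption for illustration.
for baseline_percent in (0.1, 2.0, 20.0):
    new_percent = baseline_percent * rr
    diff = absolute_difference_points(baseline_percent, rr)
    print(
        f"{baseline_percent:g}% -> {new_percent:g}% "
        f"(+{diff:.2f} percentage points)"
    )
```

The relative figure is identical in every row, so on its own it says nothing about how many patients are actually affected.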

Appropriately, the authors' conclusion was far from sensational, saying, "Clinicians should consider avoiding" prescribing the drugs in combination with one another.

Of course, clinically and ethically, a difference of 1 percentage point can and should be considered significant. If it's just as easy to avoid the drug interaction, why wouldn't you?

But it's important to understand probabilities in absolute terms—the difference between a 2 percent chance of bleeding and a 3 percent chance of bleeding might be negligible if there are other important factors in the decision-making process.

Relative risk ratios might be useful to clinicians designing guidelines, but a patient considering their options might need information in absolute terms.